Architecting Next-Generation Enterprise Search: Deep Dive into Vertex AI Search

“The following is a deep-dive blog post on our recent customer-focused workshop, Architecting Next-Generation Enterprise Search with Vertex AI Search, part of the happtiq Innovation Lab series. Want to join our next sessions? Keep an eye on your inbox for upcoming invitations!”

Traditional enterprise search often relies on simple keyword matching. This has become a significant source of friction because it fails to interpret user intent. As a result, users are forced to navigate endless "10 blue links" and manually piece together an answer. This approach is costly and inefficient, and it has a direct business impact through poor employee and customer experiences. The solution lies in a shift toward AI-native, generative search, specifically the managed, enterprise-grade capabilities of Vertex AI Search (VAIS) on Google Cloud.

Let’s see where VAIS is positioned within the Google Cloud Generative AI stack:

Foundation Models: The core, powerful, but general-purpose intelligence, such as the Gemini models. They lack grounding in proprietary enterprise data, making them prone to "hallucination" on business-specific queries.

Vertex AI: A platform for building, tuning, and deploying custom models. Choosing this route for Retrieval-Augmented Generation (RAG) requires self-managing the entire pipeline (indexing, vector stores, scaling, LLM connection), which can be complex and slow depending on the team and its requirements.

AI-Native Services (VAIS): The fully-managed, turnkey solution for Enterprise Search and RAG. It abstracts the underlying Foundation Models and Vertex AI infrastructure, providing a complete RAG system with Google-quality search and grounded answers in days.

The VAIS RAG Pipeline: How it Works

VAIS moves beyond semantic search to deliver grounded, synthesized answers via a three-stage, end-to-end RAG architecture:

Ingest & Index: Connectors pull structured (BigQuery, APIs) and unstructured (PDFs, HTML, DOCX from Cloud Storage or websites) data. Content is chunked and converted into high-dimensional numerical vectors (semantic embeddings). VAIS supports real-time streaming ingestion for rapidly changing data.

Retrieve & Rank: A user's query is also embedded, and the system performs a semantic search to retrieve the most relevant data chunks (the retrieval step of RAG). This step leverages advanced techniques like Query Rewriting (synonyms, spelling correction) and personalized, event-based re-ranking to optimize for relevance and KPIs.

Synthesize & Ground: The retrieved, top-ranked chunks, along with the original query, are sent to a Foundation Model (e.g., Gemini) to generate a final answer. Crucially, the answer is grounded in the source documents and includes citations for verifiability, which substantially reduces the risk of hallucination.
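To make the three stages concrete, here is a minimal, self-contained sketch in plain Python. It is not the VAIS implementation or its API: the bag-of-words `embed` function is a toy stand-in for real semantic embeddings, the fixed-size word chunking is a simplification, and all names (`ingest`, `retrieve`, `build_grounded_prompt`, the sample documents) are hypothetical. The last stage only builds the grounded prompt; sending it to a model is left out.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for a semantic embedding: a bag-of-words term count."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def ingest(docs: dict[str, str], chunk_words: int = 12) -> list[dict]:
    """Stage 1: split each document into word chunks and index their vectors."""
    index = []
    for doc_id, text in docs.items():
        words = text.split()
        for start in range(0, len(words), chunk_words):
            chunk = " ".join(words[start:start + chunk_words])
            index.append({"doc_id": doc_id, "text": chunk, "vector": embed(chunk)})
    return index


def retrieve(index: list[dict], query: str, top_k: int = 2) -> list[dict]:
    """Stage 2: embed the query and return the top_k most similar chunks."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda c: cosine(query_vector, c["vector"]), reverse=True)
    return ranked[:top_k]


def build_grounded_prompt(query: str, chunks: list[dict]) -> str:
    """Stage 3: assemble a prompt that asks the model to answer from cited sources only."""
    sources = "\n".join(
        f"[{i}] ({c['doc_id']}) {c['text']}" for i, c in enumerate(chunks, start=1)
    )
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"Sources:\n{sources}\n"
        f"Question: {query}"
    )


# Hypothetical sample data, for illustration only.
DOCS = {
    "hr-policy.pdf": "Employees accrue two vacation days per month. "
                     "Unused vacation days expire in March.",
    "it-guide.html": "Reset your laptop password through the self-service portal.",
}
QUESTION = "How many vacation days do employees accrue?"

index = ingest(DOCS)
top = retrieve(index, QUESTION, top_k=1)
prompt = build_grounded_prompt(QUESTION, top)
print(prompt)
```

The numbered source list is what makes citations possible: the model can only point to `[n]` markers that map back to a known document.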

Key Customization and Enterprise Features

VAIS offers extensive configuration to adapt to complex enterprise requirements:

Custom Ranking: Users can define their own ranking functions, combining factors like semantic similarity, keyword similarity, and conversion adjustments, applied on a per-query basis.

Metadata Filtering: Using metadata (author, date, tags) added during data preparation, natural language queries can be translated into precise filters that are applied before retrieval, significantly improving result accuracy.

Personalization: User events (search, click, conversion history) fuel event-based re-ranking, delivering highly relevant search results and powering related recommendations.
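The interplay between metadata filtering and custom ranking can be sketched in a few lines of plain Python. This is an illustration of the idea only, not the VAIS ranking expression syntax or its filter language: the `Candidate` fields, the weights, and the exact-match filter are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    doc_id: str
    semantic: float      # semantic similarity, assumed normalized to [0, 1]
    keyword: float       # keyword similarity, assumed normalized to [0, 1]
    conversions: float   # conversion signal from user events, assumed in [0, 1]
    metadata: dict = field(default_factory=dict)


def matches(candidate: Candidate, filters: dict) -> bool:
    """Exact-match metadata filter; real filter languages are richer than this."""
    return all(candidate.metadata.get(key) == value for key, value in filters.items())


def rank(candidates: list[Candidate], filters: dict, weights: dict) -> list[Candidate]:
    """Drop candidates that fail the filter, then sort by a weighted score."""
    def score(c: Candidate) -> float:
        return (
            weights["semantic"] * c.semantic
            + weights["keyword"] * c.keyword
            + weights["conversions"] * c.conversions
        )

    kept = [c for c in candidates if matches(c, filters)]
    return sorted(kept, key=score, reverse=True)


# Hypothetical candidates and weights, for illustration only.
candidates = [
    Candidate("A", semantic=0.9, keyword=0.2, conversions=0.1, metadata={"author": "finance"}),
    Candidate("B", semantic=0.7, keyword=0.8, conversions=0.6, metadata={"author": "finance"}),
    Candidate("C", semantic=0.95, keyword=0.9, conversions=0.9, metadata={"author": "legal"}),
]
weights = {"semantic": 0.5, "keyword": 0.3, "conversions": 0.2}

ranked = rank(candidates, filters={"author": "finance"}, weights=weights)
print([c.doc_id for c in ranked])
```

Note that document C has the strongest scores but never reaches the ranking step because the filter removes it first. Filtering narrows the candidate set; ranking only orders what is left.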

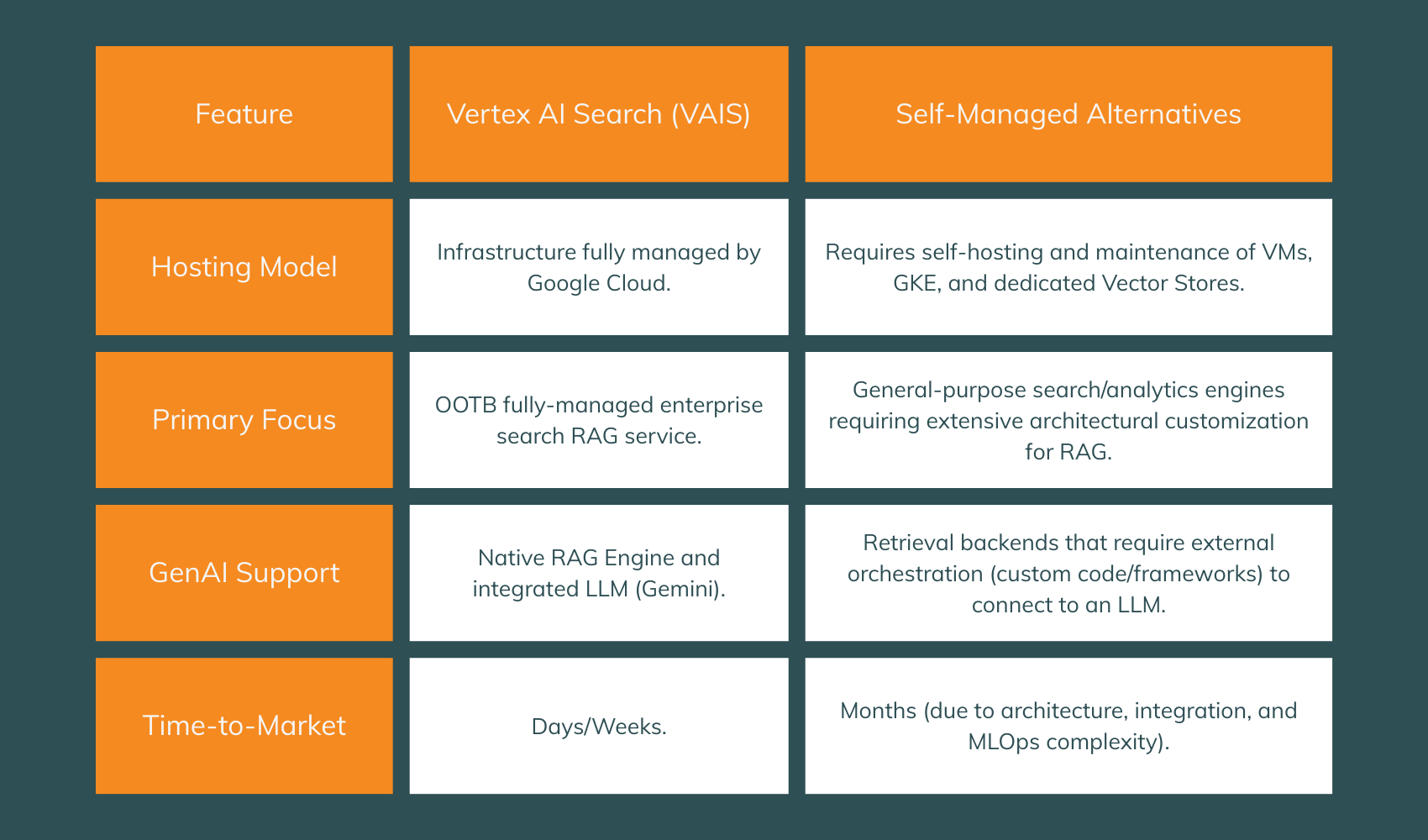

Architecture Comparison

VAIS differentiates itself from self-managed alternatives (e.g., distributed search engines requiring GKE or dedicated Vector Stores) primarily on the basis of operational complexity and time-to-market:

Integration and Best Practices

When it comes to integration, VAIS is highly flexible. It offers a REST API and client libraries for full programmatic control from any backend application, a no-code UI widget for simple portal deployments, and chatbot integration for grounding Dialogflow CX agents or custom conversational systems.
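As a rough sketch of the REST route, the helper below only assembles the URL and JSON body for a search call and does not send anything. The endpoint path and field names (`query`, `pageSize`, `filter`, `contentSearchSpec.summarySpec`) reflect our understanding of the Discovery Engine v1 search method and should be verified against the current API reference. The project ID, engine ID, and filter expression are placeholders, and authentication (an OAuth bearer token) is deliberately left out.

```python
import json

# Assumed API root for the "global" location; regional endpoints use a different host.
API_ROOT = "https://discoveryengine.googleapis.com/v1"


def build_search_request(project_id, engine_id, query, page_size=10, filter_expr=None):
    """Return (url, body) for a search call. Nothing is sent over the network."""
    url = (
        f"{API_ROOT}/projects/{project_id}/locations/global"
        f"/collections/default_collection/engines/{engine_id}"
        "/servingConfigs/default_search:search"
    )
    body = {
        "query": query,
        "pageSize": page_size,
        # Ask for a generated summary with citations (field names are assumptions
        # to check against the API reference).
        "contentSearchSpec": {
            "summarySpec": {"summaryResultCount": 3, "includeCitations": True}
        },
    }
    if filter_expr:
        body["filter"] = filter_expr
    return url, body


# Placeholder identifiers and filter expression, for illustration only.
url, body = build_search_request(
    project_id="my-project",
    engine_id="my-search-app",
    query="What is our refund policy?",
    filter_expr='category: ANY("policies")',
)
print(url)
print(json.dumps(body, indent=2))
```

In a backend service, the returned URL and body would be passed to an HTTP client together with an `Authorization: Bearer <token>` header, or replaced entirely by the official client library.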

For a successful VAIS deployment, as always, we advise adhering to a few key best practices:

Data Preparation: Quality in, Quality out. Remove boilerplate, ensure text is machine-readable, and utilize metadata to enable powerful filtering and faceting.

Evaluation: Create a "Golden Set" of key questions and perfect answers for continuous testing. Measure performance metrics like "Zero-Result Rate" and use Groundedness Checks to ensure answers are supported by the cited sources.
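The evaluation metrics above are simple to compute once search results are logged per query. The sketch below assumes a hypothetical log format (a mapping from query to the ordered list of returned document IDs) and a Golden Set that maps each question to the one source expected to answer it. The citation check is only a necessary condition for groundedness, not a substitute for a proper Groundedness Check.

```python
def zero_result_rate(results_per_query: dict[str, list[str]]) -> float:
    """Share of queries that returned no results at all."""
    if not results_per_query:
        return 0.0
    empty = sum(1 for results in results_per_query.values() if not results)
    return empty / len(results_per_query)


def golden_set_hit_rate(
    golden: dict[str, str],
    results_per_query: dict[str, list[str]],
    top_k: int = 3,
) -> float:
    """Share of golden questions whose expected source appears in the top_k results."""
    if not golden:
        return 0.0
    hits = sum(
        1
        for question, expected in golden.items()
        if expected in results_per_query.get(question, [])[:top_k]
    )
    return hits / len(golden)


def unsupported_citations(cited_ids: list[str], retrieved_ids: list[str]) -> list[str]:
    """Citations in an answer that do not point to any retrieved source."""
    retrieved = set(retrieved_ids)
    return [doc_id for doc_id in cited_ids if doc_id not in retrieved]


# Hypothetical Golden Set and logged results, for illustration only.
golden = {"q1": "a.pdf", "q2": "b.pdf", "q3": "c.pdf", "q4": "d.pdf"}
results = {
    "q1": ["a.pdf", "x.pdf"],
    "q2": [],
    "q3": ["y.pdf", "c.pdf"],
    "q4": ["z.pdf"],
}

print(zero_result_rate(results))
print(golden_set_hit_rate(golden, results))
print(unsupported_citations(["a.pdf", "q.pdf"], results["q1"]))
```

Running these checks on every configuration change turns the Golden Set into a regression test for search quality.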

Real-World Implementation

At happtiq, we are currently leveraging Vertex AI Search to solve high-value business challenges for our customers in two distinct environments:

Tourism & Hospitality (Intelligent Product Discovery)

We are replacing static search with a conversational, semantic discovery engine to transform the customer acquisition funnel. Integrating Vertex AI Search with the hotel inventory and user analytics pipeline enables dynamic, personalized recommendations. The system interprets user intent and behavior to maximize conversion potential.

Media & News Publishing (Sales Enablement Agent)

To boost operational efficiency, we developed an internal "Sales Co-Pilot" for a major news publisher. This RAG-powered agent synthesizes complex inventory data to suggest optimal bundled offers. By automating offer retrieval and configuration, it streamlines workflows and lets the sales force focus on closing deals.

Summary

The evolution from keyword-based systems to a fully managed RAG architecture like Vertex AI Search is a shift in how organizations can put their most valuable data assets to work. By abstracting the significant MLOps and infrastructure complexity that comes with self-managed RAG, VAIS accelerates the time-to-value for delivering grounded, accurate, and personalized answers across the enterprise.

Ready to transform your enterprise's data accessibility?

Assess your organization's specific search and RAG requirements to understand how Vertex AI Search can reduce friction and deliver immediate business value. Contact us to explore a PoC and discuss your implementation roadmap.